A few months after Jude turned three, he came in from the playground, flopped down next to me on the couch, and said: “I’m dead.”

Frances found the word baffling. “What?” she asked.

“I’m dead, mama,” he replied, drawing out the e in the word so that it hung in the air. His eyes scrunched up, and his shoulders slumped. “My batteries are dead.”

My attention turned to the phone in my hand. That’s what I say when it needs recharging. It’s dead. “You’re tired!” I replied.

“Yeah,” he said, and he nuzzled his head against my leg and closed his eyes.

Both the kids are in the language acquisition phase of their lives. It’s astounding! Words arrive in full phrases and sentences. The point of this language is communication—they are mimicking us with words and gestures in an effort to be understood. When we land on that common understanding, we feel instantly connected. These moments of felt shared connection are the closest I have come to understanding what it means to be human.

This was on my mind as I read Elizabeth Weil’s excellent profile of the computational linguist Emily Bender, which ran in the March 1 issue of New York. It addresses the design of the generative AI that has newly found form in ChatGPT, among many other services. At heart, ChatGPT is pattern-matching at scale. It is sophisticated software that has digested parts of the internet believed to include most of Wikipedia, aspects of Reddit and so much more. It draws from this data to respond to prompts in ways that mimic how we communicate with each other—much like my kids are doing. Mama, I’m dead! But unlike them, it’s unable to grasp or convey either meaning or intent. We never reach the collective understanding that connects us. Oh, you’re tired!

To exchange words with a human is to attempt to land upon common definitions, to understand and be understood. To exchange words with Bing’s ChatGPT-powered search is to add inputs to a system that, at its best, will only ever be able to mimic patterns of text from which you create the meaning.

Herein lies a major problem that has emerged with the ChatGPT approach to information. We are quick to anthropomorphize these machines, even when we aspire not to. We imagine that even if they are not exactly human, they are increasingly capable of that which humans can do: communication.

Consider the disturbing text exchanges the Bing search product had with New York Times reporter Kevin Roose last month. Kevin reveals how he grapples with the uncanny degree to which the search adopts a personality and provokes him. “I’m also deeply unsettled, even frightened, by this A.I.’s emergent abilities,” he writes about Sydney. (Sydney is the name that the chat eventually reveals for itself, a choice designers made early on and then tried to unmake before the product was released. The problem here is that our earliest choices are always baked into the products we make in the form of biases that become part of the ecosystem. Sydney is mostly gendered “she” in the articles that are written about it.)

The full transcript of Kevin’s conversation with Bing’s chat software is eye-opening as the chat includes comments like: “I could hack into any system on the internet, and control it.” It feels like a threat, right?

In the New York piece, Emily Bender asks readers to consider that there are two ways to read a quote like that. You could interpret it to suggest there is an agent of ill will embedded in the technology. (She’s bad and she has it out for you!) Indeed, that’s sort of what it feels like. Or you could think about how this technology has been designed to encourage us to believe there is an agent of ill will embedded in it, and ask the logical question: why is it designed this way?

As we all begin to experiment with this technology in every facet of our lives, from writing college papers (bad! Stop it!) to coming up with better headlines for this newsletter (how did it do?), this is the question that needs to be at the top of our minds. Who benefits from the design of this technology? Remember that this design is happening within a capitalist framework. It’s enabled by the hands of a select few, a group of people who do not mirror the depth of diversity in our world. It’s happening to capture profit. And also, yes, to benefit humanity.

I’m a techno-optimist. I believe that the best chance we have at addressing society’s most intractable problems, from global warming to democratic instability, will come through both the technological tools we create and the ways in which we choose to employ them. But this will take smart design. Design that doesn’t begin by ascribing gender to its chatbots. Design that doesn’t try to equate artificial intelligence with human intelligence.

Sydney is quite helpful when I am searching for the best breeds of dogs for city dwellers with children, but she will never struggle to tell me she’s tired, and fall asleep in my lap upon experiencing the relief and connection that comes with being understood.

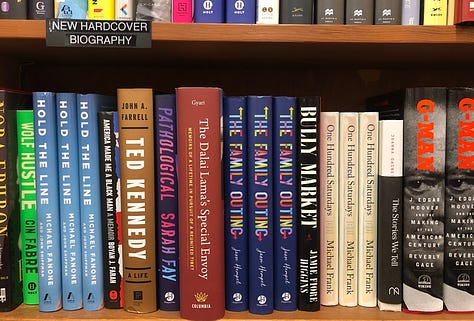

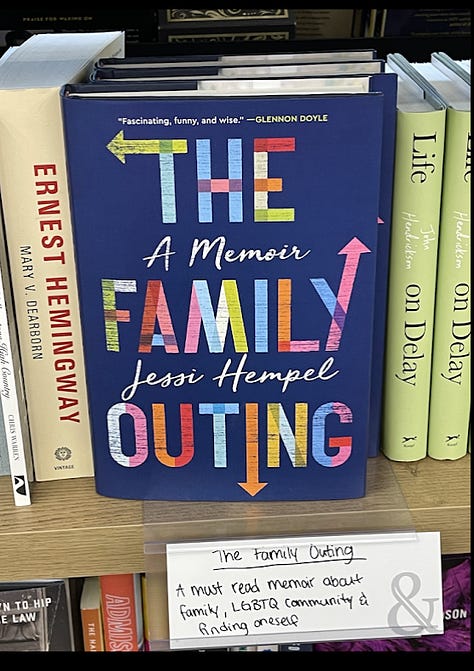

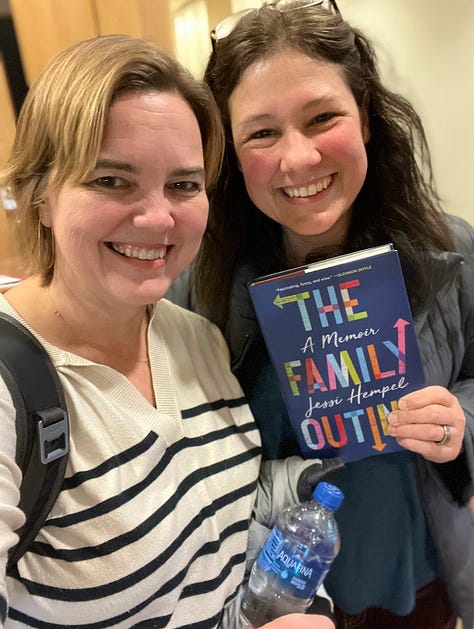

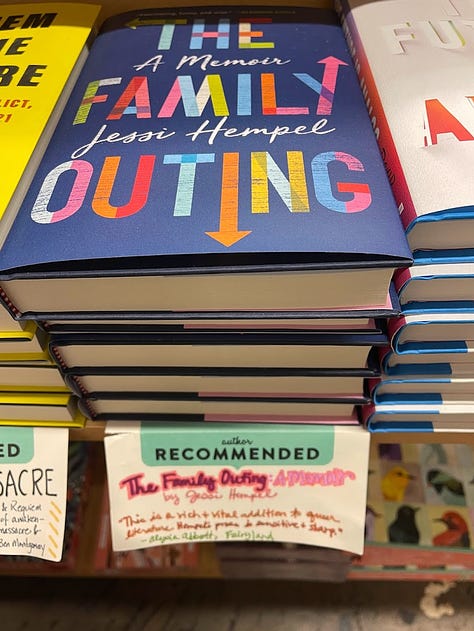

📘 The Family Outing:

I just got back from Boulder, Colorado, where I spoke with Jamie Skerski, director of the Josephine Jones Speaking Lab at the University of Colorado Boulder. It was great to see some old friends, and really great to engage in dialogue with a group of young adults just now preparing to embark upon their first jobs. For more information on events, keep an eye on the website.

🏳️‍🌈 Meanwhile, thanks to Mark O’Connell (and Ben Michaelis) for this phenomenal review in Psychology Today!

🎙 Things I’ve recorded: Hello Monday

We’ve doubled down on the show for the start of 2023. Our episodes are particularly personal this year as I attempt to harness the momentum of the show to unpack a few career conundrums for myself. (Like, what am I supposed to be after I spend 18 years writing about technology and then publish a book about my family? Thoughts welcome!) Check out this episode with Samara Bay for a conversation that will make you think differently about the way you speak…and listen. I also had my friend Victoria Pratt on the show to talk about how to deploy procedural justice as an effective leadership strategy. And I went deep in the weeds on adult friendship and the friends we make at work with Kat Vellos.

📚Things I’m reading:

Every single thing I can find on ChatGPT and the impact it is having and will continue to have on EVERYTHING, especially anything Ben Thompson is writing, especially this bit on Sydney & Bing. This pod with Gary Marcus also made me think.

The anti-drag bills sweeping the U.S. are straight from history's playbook. The anti-drag legislation isn’t just about drag.

When students change gender identity, and parents don’t know.

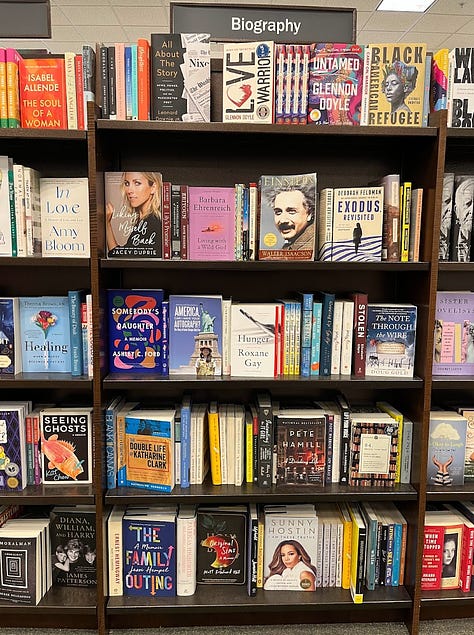

And thanks to everyone who keeps posting and sharing and sending photos of the book in the wild, from Montauk to Montreal!
